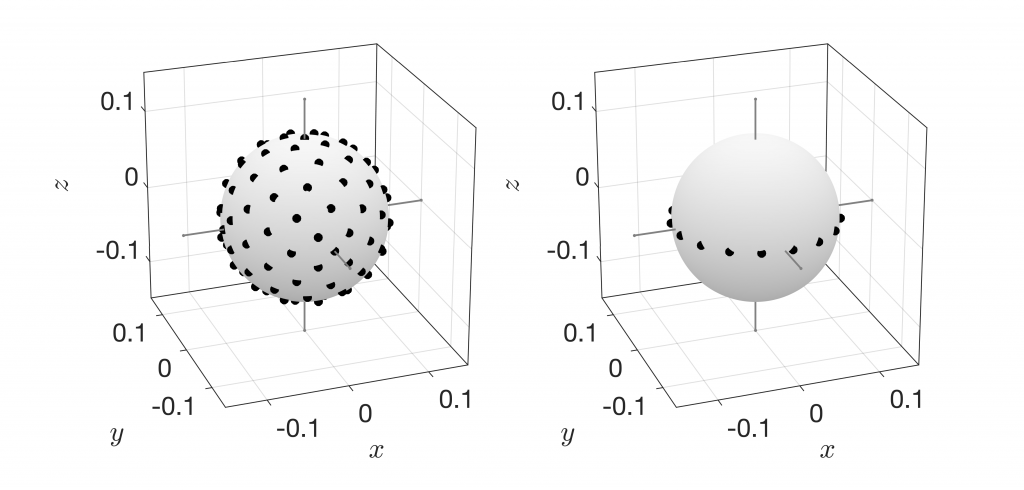

You might recall our work on equatorial microphone arrays, EMAs, (which are composed of a spherical baffle with microphones along its equator) that we highlighted in this post and demoed in this post. Such a setup needs only 2N+1 microphones to produce an ambisonic representation of Nth order. The 16-channel array from the demo video (cf. the image below) produces therefore 7th-order ambisonic signals.

16-channel EMA created by the Audio Communication Group at TU Berlin

We figured that it may not be obvious to everyone how the signal processing would need to be implemented to produce ambisonic signals that are compatible with software tools like SPARTA or the IEM Suite. This entails actually an intermediate real-valued circular harmonic decomposition and other stunts that are not obvious from the original publication. We therefore provide MATLAB scripts that implement the ambisonic encoding of the raw microphone signals (and the subsequent binaural rendering, too, for that matter) here:

https://github.com/AppliedAcousticsChalmers/ambisonic-encoding

The raw microphone signals from the video are also included as an example case as well as pre-rendered binaural signals and a Reaper project that allows you to experience the binaural rendering with head tracking if you happen to have a tracker available. There is plenty of pdf documentation, too.

To be comprehensive, we also provide the whole spiel for conventional spherical microphone arrays (SMAs).